Latest organic search news – October 2025

We’ve compiled the essential updates from Google and the broader world of search from the last month – keeping you up to date with everything you need to know.

TL;DR

- Google disables the &num=100 parameter, impacting SERP analysis, rank tracking, and reducing Search Console impressions.

- ChatGPT launches Instant Checkout, starting with Etsy and with Shopify to follow, extending ChatGPT from product research into purchasing.

- Unverified early evidence of ChatGPT accessing an llms-full.txt file suggests LLMs may soon start respecting structured publisher instructions.

- Despite AI’s rise, Google remains the biggest traffic driver, though its share of referrals has fallen by ~13.5% since January. Sector and regional differences mean there is no one-size-fits-all strategy.

- The authenticity of experts used in digital PR is under the spotlight, with credibility now critical for E-E-A-T.

- Media redundancies are reshaping the outlet landscape, with journalist-owned ventures on Beehiiv and Substack growing.

Google disables &num=100 parameter (and impressions drop off a cliff)

One of the biggest stories this month is Google disabling the &num=100 parameter, which many SEOs and tools relied on to view and analyse the first 100 results in a single SERP.

About a year ago, Google removed the option to easily view 100 results in the SERP. For those of us who spend time analysing beyond page one, a workaround was to use the &num=100 parameter. Personally, my most-clicked bookmarklet forced this view, so its removal is a big change.

Why this matters for SEOs

The results on page two and beyond are where opportunities and competitive insight lie. Losing the ability to quickly scan these results makes analysis more time-consuming and less accurate.

Impact on rank tracking

For the rank tracking industry, costs have increased significantly. Tools that previously pulled 100 results in one request now need to run ten requests to cover the same ground. Many have responded by reducing the depth or frequency of updates.
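To make the cost change concrete, here is a minimal Python sketch of the request arithmetic. It assumes that a tracker covering the top 100 now paginates with Google's `start` offset parameter in steps of ten; the `serp_urls` helper and the example query are hypothetical, and the URLs are only built to count requests, never fetched.

```python
from urllib.parse import urlencode

BASE = "https://www.google.com/search"  # used only to build illustrative URLs


def serp_urls(query: str, depth: int = 100, per_page: int = 10, use_num: bool = False) -> list[str]:
    """Return the (hypothetical) SERP URLs needed to cover `depth` results."""
    if use_num:
        # Old approach: one request asking for all results via &num=100
        return [f"{BASE}?{urlencode({'q': query, 'num': depth})}"]
    # Assumed current approach: one request per page, offset with &start=0, 10, 20, ...
    return [
        f"{BASE}?{urlencode({'q': query, 'start': start})}"
        for start in range(0, depth, per_page)
    ]


before = serp_urls("trail running shoes", use_num=True)
after = serp_urls("trail running shoes")
print(len(before), len(after))  # 1 10
```

Multiply that tenfold increase across thousands of tracked keywords and daily update schedules, and it is easy to see why tools have cut depth or frequency.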

At Distinctly, we have seen the following across our tool stack:

- SEOmonitor still pulls the top 20 daily and the rest weekly, so core reporting isn’t heavily impacted.

- Ahrefs has always been more directional for rankings, so little change there.

- SEO API data is still available, but more expensive to run.

- Share of voice benchmarks remain unaffected, as we primarily focus on the top 10.

Search Console effects

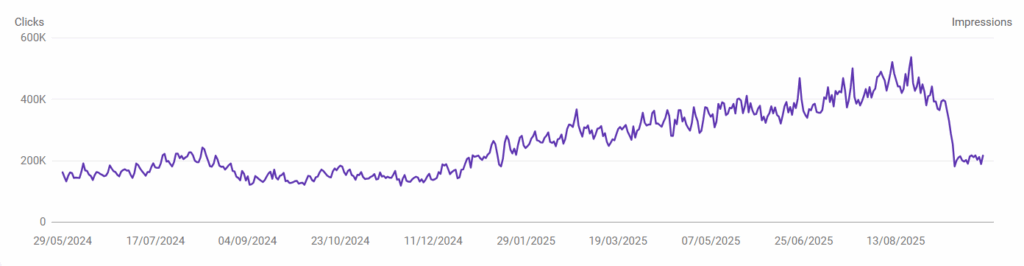

The change coincided with a noticeable drop in impressions within Google Search Console, especially on desktop, while average positions improved. This suggests that bot activity from scrapers and rank tracking tools was inflating impression data: by loading results far beyond page one, those tools added impressions at deep positions that dragged average position down. Many of us have used that data to inform strategy and report to stakeholders, so comparisons with historical data will now be more difficult and come with more caveats than usual.
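Why would average position improve as impressions fall? A simplified sketch helps. Average position is weighted by impressions, so if scrapers were generating impressions at deep positions, removing them pulls the average back towards page one. The figures below are invented for illustration, and the `average_position` helper is a simplification of how Search Console actually computes the metric.

```python
def average_position(rows: list[tuple[float, int]]) -> float:
    """Impression-weighted average position (a simplification of Search Console's metric)."""
    total_impressions = sum(impressions for _, impressions in rows)
    return sum(position * impressions for position, impressions in rows) / total_impressions


# Hypothetical (position, impressions) rows from real searchers
human_rows = [(3, 900), (8, 300)]
# Hypothetical extra impressions recorded when &num=100 scrapers loaded deep results
bot_rows = [(65, 400)]

before = average_position(human_rows + bot_rows)
after = average_position(human_rows)

print(f"Impressions: {sum(i for _, i in human_rows + bot_rows)} -> {sum(i for _, i in human_rows)}")
print(f"Average position: {before:.2f} -> {after:.2f}")
```

In this made-up example, impressions fall by a quarter while average position improves from roughly 19 to around 4, even though nothing about real visibility has changed.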

Why Google has made this change

The general consensus is that Google made the change simply to reduce large-scale scraping, particularly by AI crawlers.

Paid impact

Some PPC managers have noticed small anomalies in Google Ads impression reporting, though overall the effect appears limited compared to organic. The primary disruption is on the SEO side, where both practitioners and tools have had to adjust workflows.

Overall view

If you’ve seen a sudden drop in impressions in your Search Console reports this month, don’t be alarmed. This isn’t a sign that your visibility has collapsed; it’s more of a readjustment. The inflated impression numbers from bot activity are no longer being counted, so what you’re seeing now is a cleaner, more accurate baseline.

With the huge rises in impression figures over the past six months, largely driven by the continued rollout of AI Overviews, it’s been a bumpy ride for anyone trying to make sense of the data Google gives us. This change should stabilise reporting and will likely remain the new norm.

For most SEOs, accurate data below position 50 is not critical. Approximations at that level are usually fine. But for the top positions, reliable data is essential. This update makes it harder to get a full competitive picture, but it does not affect the most important reporting and benchmarking we provide, which focuses on page-one performance.

ChatGPT moves into e-commerce with Instant Checkout

OpenAI has launched Instant Checkout in ChatGPT, starting with Etsy and with Shopify integration “coming soon.” U.S. users can now buy products directly inside ChatGPT, with Stripe powering payments. Merchants on Etsy or Shopify are auto-enrolled; others will need to set up a product feed and connect checkout through Stripe. For now, it’s limited to single-item purchases, but multi-item carts and international rollout are expected to follow.

From research to transaction

ChatGPT has quickly become part of the product research process for many users. Adding checkout closes the gap between research and purchase: once you trust the recommendation, you can buy it instantly. How quickly users embrace this will vary. I imagine many will be comfortable making a low-cost, repeat purchase, while others will be more cautious about letting AI handle a significant financial transaction.

Adoption will depend on factors like risk appetite and technophobia, but it marks the next step in how people use ChatGPT – moving from researching products to actually buying them.

What this means for merchants

- Shopify advantage: For anyone in e-commerce considering a site refresh or migration, this integration is another string to Shopify’s bow. Shopify merchants will be automatically included once the integration goes live. Instant Checkout is not exclusive to Shopify, but it is a lot simpler if you are on the platform.

- Etsy angle: Etsy was chosen as the first launch partner, likely because of its large, diverse catalogue and modern API/payment setup. That means Etsy sellers are included from day one, but it does not guarantee greater visibility in ChatGPT. Ranking is still based on factors like price, quality, availability and data completeness. Shopify merchants will have the same auto-enrolment advantage once their integration goes live.

Data quality is the new moat

As Josh Blyskal from Profound pointed out, optimising for ChatGPT shopping isn’t the same as optimising for Google Shopping. There are no keyword fields or custom labels here; instead, ChatGPT breaks a query down across structured data fields like [product_category], [description], [price] and [delivery_estimate].

So when a user asks something like “I need waterproof trail running shoes under £150 that arrive by Friday”, the system is matching against those fields rather than keyword matching in the traditional sense. That makes completeness of product data critical. Optional fields like [material], [weight], [shipping], [return_window] and [review_count] all increase the chances of your product being the right fit.
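To illustrate how field-based matching differs from keyword matching, here is a toy Python sketch that splits the example query into field-level constraints by hand and filters a feed against them. It is not how ChatGPT ranks products internally: the product records, their values, the `name` key, the `matches` helper and the delivery date are all hypothetical, and only the other field names come from the discussion above.

```python
from datetime import date

# Hypothetical feed records; values are invented for illustration.
products = [
    {"name": "Ridge WP", "product_category": "trail running shoes",
     "description": "Waterproof membrane upper with deep lugs for muddy trails.",
     "price": 135.00, "delivery_estimate": date(2025, 10, 23)},
    {"name": "Dry Trail Lite", "product_category": "trail running shoes",
     "description": "Breathable mesh upper for dry, dusty trails.",
     "price": 110.00, "delivery_estimate": date(2025, 10, 22)},
    {"name": "Summit Carbon WP", "product_category": "trail running shoes",
     "description": "Waterproof upper with a carbon plate.",
     "price": 180.00, "delivery_estimate": date(2025, 10, 22)},
    # No delivery_estimate, so the "arrive by Friday" constraint cannot be confirmed
    {"name": "Bog Runner", "product_category": "trail running shoes",
     "description": "Waterproof and lightweight.", "price": 140.00},
]

# "Waterproof trail running shoes under £150 that arrive by Friday", decomposed by hand
FRIDAY = date(2025, 10, 24)  # hypothetical Friday


def matches(product: dict) -> bool:
    """True only if every query constraint is satisfied by a populated field."""
    delivery = product.get("delivery_estimate")
    return (
        product.get("product_category") == "trail running shoes"
        and "waterproof" in product.get("description", "").lower()
        and product.get("price", float("inf")) < 150
        and delivery is not None
        and delivery <= FRIDAY
    )


shortlist = [p["name"] for p in products if matches(p)]
print(shortlist)
```

Bog Runner drops out not because it is a worse product but because a field is empty, which is exactly why completeness of product data matters.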

The [description] field, with its 5,000-character limit, is especially important. It’s effectively the knowledge base for your product, the context ChatGPT uses to answer long-tail, multi-factor queries.

The reality is, most brands won’t bother to fill all of this out properly. That creates an advantage for those who do: investing in clean, detailed product data will directly improve visibility in ChatGPT shopping results.
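A simple audit script can show where a feed falls short. The Python sketch below checks one item for the four fields named first above, measures coverage of the optional fields, and flags descriptions over the 5,000-character limit. Treating those four fields as "core", the `audit` and `is_filled` helpers, and the sample item are assumptions for illustration; the official product feed specification should be treated as the authority on field names and requirements.

```python
CORE_FIELDS = ("product_category", "description", "price", "delivery_estimate")  # assumed core set
OPTIONAL_FIELDS = ("material", "weight", "shipping", "return_window", "review_count")
DESCRIPTION_LIMIT = 5000  # characters


def is_filled(value) -> bool:
    """Treat None and empty strings as missing, but keep legitimate zeros (e.g. review_count=0)."""
    return value is not None and value != ""


def audit(item: dict) -> dict:
    """Summarise how complete a single (hypothetical) feed item is."""
    description = item.get("description") or ""
    filled_optional = [f for f in OPTIONAL_FIELDS if is_filled(item.get(f))]
    return {
        "missing_core": [f for f in CORE_FIELDS if not is_filled(item.get(f))],
        "optional_coverage": len(filled_optional) / len(OPTIONAL_FIELDS),
        "description_chars": len(description),
        "description_too_long": len(description) > DESCRIPTION_LIMIT,
    }


item = {
    "product_category": "trail running shoes",
    "description": "Waterproof membrane upper with deep lugs for muddy trails.",
    "price": 135.00,
    "delivery_estimate": "2-3 working days",
    "material": "recycled polyester",
    "review_count": 0,
}
report = audit(item)
print(report)
```

Run across a whole feed, the same checks give a quick ranking of which products most need their data filled in.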

Opportunities and limitations

This is still an emerging market and adoption will take time, but the opportunity is significant for brands who move early and invest in product data hygiene. There is a first-mover advantage, too, for those with healthy feeds.

Overall view

AI shopping is at the very beginning of its evolution. Instant Checkout is the first step towards a more intent-driven, agentic shopping model, where assistants do not just recommend products but complete transactions. It is too soon to know how consumer behaviour will shift, but for e-commerce brands, this could be the start of something that changes the game. If you are already on Shopify or Etsy, you will be part of the rollout by default. If you are considering a platform migration or debating where to list your products, this adds further weight to those ecosystems.

Early signs of llms.txt in the wild?

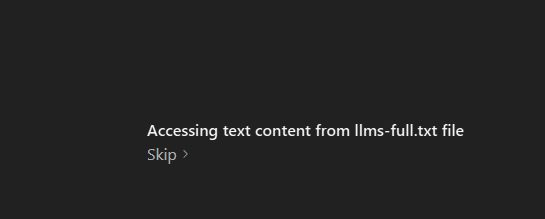

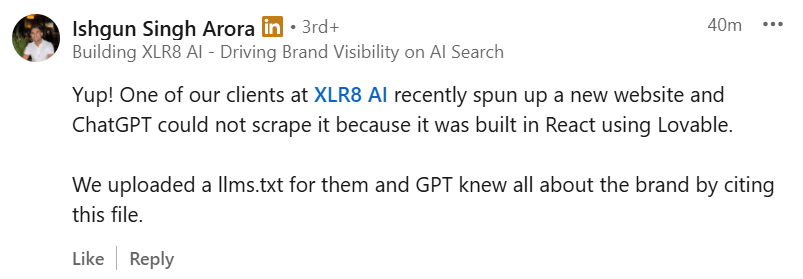

A new screenshot shared on LinkedIn by Aimee Jurenka appears to show ChatGPT accessing content from an llms-full.txt file.

There has been plenty of discussion in the industry around llms.txt files: whether they are genuinely useful, whether crawlers respect them, or whether they are just a placeholder until an “official” protocol emerges. What makes this screenshot interesting is that it references llms-full.txt, something we have not seen referenced before.

This has not been verified or confirmed by OpenAI, but it is the first visible sign of ChatGPT potentially pulling data from such a file. If true, it would mark an important step towards LLMs respecting structured publisher instructions in a way similar to robots.txt.

As always, information is only as good as how you use it. Testing with llms.txt is something we plan to explore more over the coming month.
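One low-effort way to test this on your own site is to check server access logs for requests to llms.txt and llms-full.txt from OpenAI's crawlers. Here is a minimal Python sketch, assuming logs in the common Apache/Nginx combined format; the sample lines and the `llms_txt_hits` helper are hypothetical. Matching on the GPTBot, ChatGPT-User and OAI-SearchBot user-agent tokens is only indicative, since user agents can be spoofed, and confirming genuine OpenAI traffic would also mean checking the requesting IPs against OpenAI's published ranges.

```python
import re
from collections import Counter

# Request line, status, size, referrer and user agent from a combined-format log line
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)
TARGET_PATHS = {"/llms.txt", "/llms-full.txt"}
OPENAI_AGENTS = ("GPTBot", "ChatGPT-User", "OAI-SearchBot")


def llms_txt_hits(lines) -> Counter:
    """Count (path, agent) pairs for llms.txt-style requests from OpenAI user agents."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if not match:
            continue
        path = match.group("path").split("?", 1)[0]  # ignore any query string
        if path not in TARGET_PATHS:
            continue
        agent = next((a for a in OPENAI_AGENTS if a in match.group("ua")), None)
        if agent:
            hits[(path, agent)] += 1
    return hits


# Invented sample lines for illustration
sample_log = [
    '203.0.113.7 - - [20/Oct/2025:10:15:32 +0000] "GET /llms-full.txt HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; ChatGPT-User/1.0; +https://openai.com/bot"',
    '198.51.100.4 - - [20/Oct/2025:11:02:10 +0000] "GET /llms.txt HTTP/1.1" 200 812 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; GPTBot/1.1; +https://openai.com/gptbot"',
    '192.0.2.55 - - [20/Oct/2025:12:40:01 +0000] "GET /llms.txt HTTP/1.1" 200 812 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [20/Oct/2025:10:16:05 +0000] "GET /about HTTP/1.1" 200 9400 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; ChatGPT-User/1.0; +https://openai.com/bot"',
]

hits = llms_txt_hits(sample_log)
for (path, agent), count in sorted(hits.items()):
    print(f"{path} <- {agent}: {count}")
```

Tracking these counts over a few weeks, before and after publishing an llms.txt file, would give a rough signal of whether the files are being fetched at all.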

The bull case for Google and traditional SEO

After two very interesting AI updates, it’s worth taking a step back. Rand Fishkin’s recent LinkedIn video was a good reminder that while AI assistants are getting a lot of attention, Google is still by far the biggest driver of referral traffic to websites.

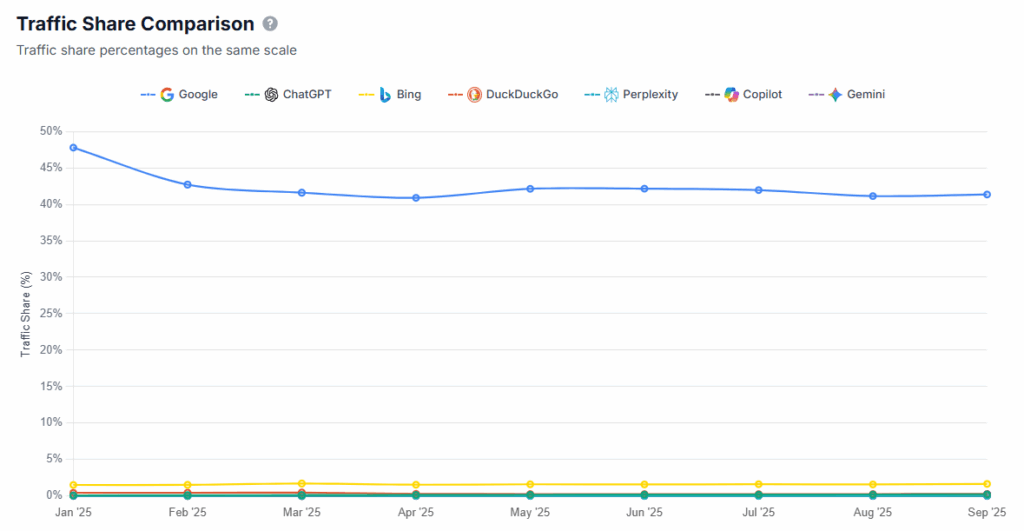

Data from chatgpt-vs-google.com shows that across more than 60,000 sites, Google accounted for over 41% of traffic share in September 2025. ChatGPT drove just 0.24%, with other AI assistants showing even less.

That said, the trend is clear: Google’s share of referral traffic is in decline. Back in January, Google made up 47.78% of referrals across those same sites. By September, that had fallen to 41.35%, a drop of 6.43 percentage points, or around 13.5% in relative terms, in just nine months.
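The headline figure is easy to misread, because it is a relative change in a share rather than a fall of 13.5 percentage points. Working it through with the January and September figures above:

```python
jan_share = 47.78  # Google's share of referrals in January 2025 (%)
sep_share = 41.35  # Google's share of referrals in September 2025 (%)

point_drop = jan_share - sep_share            # change in percentage points
relative_drop = point_drop / jan_share * 100  # change as a % of January's share

print(f"{point_drop:.2f} percentage points, {relative_drop:.1f}% relative")
```

Both framings are accurate; the relative figure describes how much of Google's January share has gone, while the percentage-point figure describes how much of total referral traffic moved.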

Note – this is global. Across our own clients, Google sends 50-60% of all traffic on average. This varies with how powerful the brand is (which correlates strongly with direct traffic) and how much email and social activity takes place. Overall, though, we are talking about billions of clicks, and whilst a drop in Google’s share of referrals is a big thing, 41% still equates to a huge number from one source.

However, it is undeniable that user behaviour is shifting.

For me, this is the balance point. Testing and tracking visibility in AI search is important, but not at the expense of Google. The same good practices broadly help in both ecosystems anyway. It is not about choosing between traditional SEO and AI search, but about understanding how they overlap and how users move between them.

It is also worth remembering that the aim of LLMs is not to send traffic out, but to keep users inside their ecosystems. Referral numbers only tell part of the story. People may use AI search to get an instant answer, then still head to Google to validate, compare or take action.

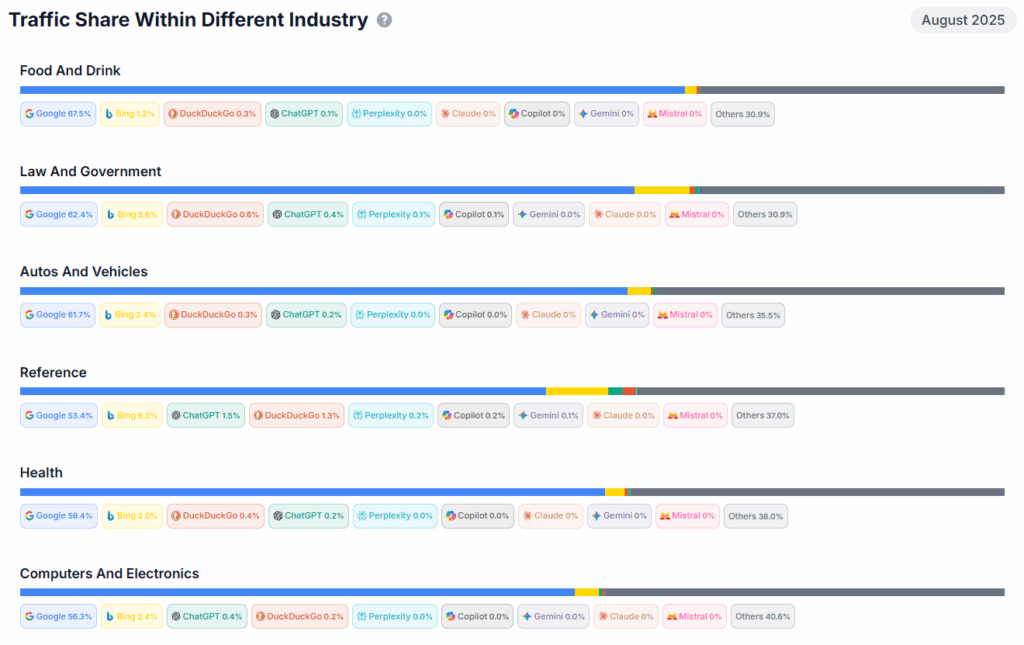

The sector breakdowns are where this gets most interesting. If you are in an industry where ChatGPT actively sends more traffic out, such as law and government, telecoms or science, focusing more energy there sounds logical, but again, not at the expense of Google.

For example, law also has the second-highest share of Google referral traffic of any sector. For other sectors, AI visibility still matters, but you need to set realistic expectations for click-throughs and use this context when analysing performance.

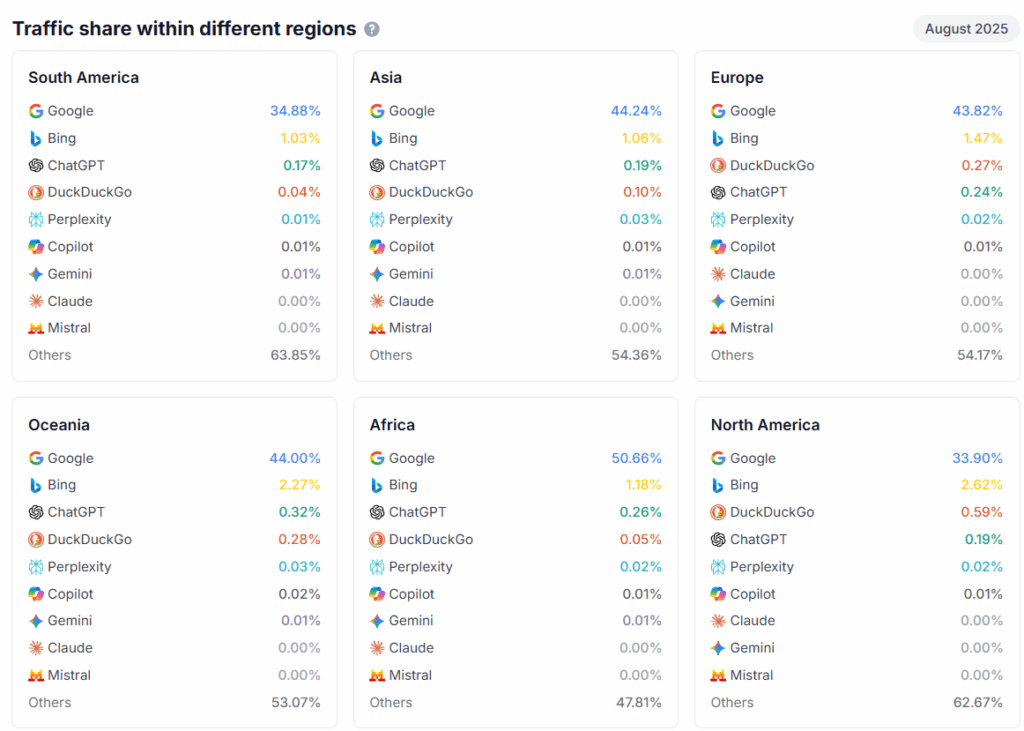

Geographical differences matter too. The same dataset shows big regional variations: for example, Google accounts for nearly 44% of referrals in Europe, but only around 34% in South America. In North America, Bing’s share is notably higher at 2.6% compared to other regions. This means there is no one-size-fits-all strategy. The right balance of effort across Google, Bing and AI assistants will depend on where your customers are based and the behaviours typical of those markets. Nuance is needed when planning your visibility strategy across regions.

Overall, context is key. The question you need to be asking is: how can I be as visible as possible across the mediums that matter most to my customer base?

Why everyone is suddenly talking about “real” experts

Back in February we talked about the importance of expert legitimacy in digital PR, as concerns grew over AI-generated “experts” being used to secure coverage.

Since then, scrutiny has only increased. Recent stories, from the Royal cleaner controversy to reports of agencies pushing fake case studies, show how fragile trust can be in the media ecosystem, especially as AI-generated content scales.

For brands, this is not just about avoiding reputational risk. It is about investing in Experience, Expertise, Authoritativeness and Trustworthiness (E-E-A-T). The State of SEO 2026 report found that nearly 50% of SEO professionals are actively prioritising E-E-A-T reinforcement as a response to the rise of AI content.

For digital PR, the takeaway is clear: campaigns built around expert insights must be anchored in verifiable expertise, credible spokespeople, data-led stories and demonstrable real-world experience.

Media redundancies are reshaping the outlet landscape

Earlier this year, around half of The Observer’s staff took redundancy rather than transferring to the new ownership structure. Some of those journalists have since started new ventures. Most recently, five senior staff launched The Nerve, a publication funded partly by redundancy payouts and hosted on the newsletter platform Beehiiv.

This reflects a wider trend: journalists moving away from consolidated legacy outlets towards independent, journalist-owned platforms like Beehiiv and Substack. For PR teams, it means adjusting how you track and build media relationships:

- Track journalists’ moves closely: knowing where key contacts are heading should be part of your outreach strategy.

- Expand your monitoring: outlets like The Nerve are launching on newsletter platforms first, with plans to scale later. Do not just watch traditional media.

- Invest in early relationships: connecting with journalists at the start of these ventures can help build trust and open up more opportunities down the line.

Stay ahead in SEO with Distinctly

Enjoyed this roundup? Stay updated with the latest organic trends, tips and exclusive insights by subscribing to our newsletter.